Remote IoT Batch Job Example: What Your Data Since Yesterday Tells You

Have you ever thought about how all those tiny sensors and smart devices out there send their information back home? It's a big job. Sometimes you need to gather up all that information at once, especially when it has been collecting for a while, perhaps since yesterday. This is where a remote IoT batch job comes into its own. It helps you make sense of things that happened a little while ago, and it's a clever way to handle a lot of incoming data.

When devices are far away and sending small bits of data all the time, dealing with each piece as it arrives can get overwhelming. Imagine a network of weather stations in different places, all sending temperature readings. You might not need the exact temperature at every second, but you probably want to see what the temperature did over the whole day, say, from yesterday morning until now. This is a common situation, and it's where a remote IoT batch job that processes everything since yesterday becomes very useful.

This kind of setup lets you gather up all the collected data in one go. It's like collecting the mail from your mailbox at the end of the day instead of running out every time a new letter arrives. The approach is efficient for spotting trends or checking whether anything unusual happened over a period of time, which is exactly what you want when information has been building up since yesterday.

Table of Contents

- Understanding Remote IoT Data

- What is a Batch Job, Anyway?

- Why Process Data from Yesterday?

- Setting Up Your Remote IoT Batch Job

- Benefits of This Approach

- Common Challenges and How to Handle Them

- Tools and Systems You Might Use

- Making Sense of the Results

- Frequently Asked Questions

Understanding Remote IoT Data

When we talk about "remote IoT data," we mean information that comes from devices that are not right next to you. These could be sensors in a distant farm field, smart meters in people's homes, or trackers on delivery trucks. Each device collects some kind of information, such as temperature, movement, or energy use, and that information then has to travel from the remote location to a central place where it can be analyzed.

These devices are often small and run on very little power, so they don't always send data continuously. Some send bursts of information; others store data locally and upload it all at once when they get a good connection. This is different from, say, a live video stream, which needs a constant flow. For many uses, receiving data in chunks is perfectly fine, and when you care about what happened since yesterday, it is often preferable.

The sheer amount of information these remote devices generate adds up quickly. Picture hundreds or thousands of devices, each sending a few readings every hour: 1,000 devices reporting 4 readings an hour already produce 96,000 readings a day. Handling that volume efficiently is a big part of the puzzle, which is why people look for systematic ways to process it, especially when they want a clear picture of what has happened since yesterday morning.

What is a Batch Job, Anyway?

A "batch job" is a set of computer tasks that runs automatically, without anyone watching over it. You essentially tell the computer, "Here is a list of things to do; do them all, one after another or some at the same time." Batch jobs suit tasks that don't need immediate human interaction. Processing payroll at the end of the month is a classic example: you collect all the hours worked, and the system calculates everyone's pay in one go.

The idea is to gather all the necessary information first and then process it together. This differs from "real-time" processing, where each piece of information is handled as it arrives. With a batch job, you wait until you have a whole "batch" to work on. For many tasks this is more efficient, especially when a lot of data has accumulated over time, such as since yesterday. It's a bit like running all your errands for the week on one day rather than going out every day for a single thing.

Batch jobs are well suited to work that can run in the background, such as cleaning up old files, generating reports, or, in our case, analyzing data collected from remote IoT devices. They can be triggered at specific times (say, every night) or when a certain amount of data has piled up. Either way, you get a summary or analysis without constantly checking things yourself, which is very helpful when you're trying to understand patterns that emerged since yesterday.
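
The two kinds of trigger described above (a fixed time, or enough piled-up data) can be combined in a single check. The sketch below is illustrative only: the function name, the 1 AM start time, and the 10,000-record threshold are hypothetical defaults, not values from any particular scheduler.

```python
from datetime import date, datetime, time
from typing import Optional


def should_run_batch(now: datetime, last_run_date: Optional[date],
                     pending_records: int, run_at: time = time(1, 0),
                     record_threshold: int = 10_000) -> bool:
    """Decide whether a (hypothetical) batch job should start now."""
    # Time-based trigger: past the daily start time and not yet run today.
    time_trigger = now.time() >= run_at and last_run_date != now.date()
    # Volume-based trigger: enough unprocessed records have piled up.
    volume_trigger = pending_records >= record_threshold
    return time_trigger or volume_trigger


print(should_run_batch(datetime(2024, 5, 2, 1, 30), date(2024, 5, 1), 0))  # True
```

Either trigger alone is enough to start the job; in practice you would also record `last_run_date` after each successful run so the time trigger fires only once per day.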

Why Process Data from Yesterday?

Looking at data from "yesterday" is useful for several reasons. It gives you a historical view: a snapshot of what happened over a full day, or longer. For instance, if sensors monitor the temperature in a cold storage unit, checking yesterday's data can reveal spikes or dips that might have affected the stored goods. That kind of look back is important for quality control.

Processing data from a specific past period also helps you identify trends. Imagine tracking energy consumption in a building. By comparing yesterday's usage with the day before, or with the same day last week, you can spot patterns: maybe energy use always rises on Tuesday afternoons, or perhaps it dropped unexpectedly yesterday. These insights help you make better decisions about how things are run. In other words, you learn from the past to improve the future.
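
A day-over-day comparison like the one described above is usually a percent change between two daily totals. This is a minimal sketch assuming the daily totals are already computed and keyed by ISO date string; the 20% "unusual" threshold is a hypothetical choice.

```python
from typing import Dict, Optional


def day_over_day(daily_kwh: Dict[str, float], day: str,
                 previous_day: str) -> Optional[float]:
    """Percent change in energy use from previous_day to day.

    Returns None when either day is missing or the baseline is zero."""
    prev = daily_kwh.get(previous_day)
    curr = daily_kwh.get(day)
    if prev is None or curr is None or prev == 0:
        return None
    return (curr - prev) / prev * 100.0


def flag_unusual(change_pct: Optional[float], threshold_pct: float = 20.0) -> bool:
    """Flag changes at least threshold_pct in either direction (hypothetical rule)."""
    return change_pct is not None and abs(change_pct) >= threshold_pct


usage = {"2024-05-01": 480.0, "2024-05-02": 360.0}
change = day_over_day(usage, "2024-05-02", "2024-05-01")
print(f"{change:.1f}%")  # -25.0%
```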

Batch processing of yesterday's data is also widely used for reporting and compliance. Many businesses need daily summaries for internal review or for regulators. A batch job can automatically pull all the relevant data from the previous day, format it into a readable report, and send it to the right people. This saves manual effort and keeps reports consistent and accurate, which makes it a more reliable way to maintain a clear record of what happened.
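
The "put it into a readable format" step can be as simple as writing one CSV row per sensor. The sketch below assumes the per-sensor summaries are dicts with hypothetical keys `sensor_id`, `avg`, `min`, and `max`; delivering the report (email, upload) is left out.

```python
import csv
import io
from typing import Dict, List


def build_daily_report(report_date: str, summaries: List[Dict]) -> str:
    """Render per-sensor summaries for one day as CSV text, sorted by sensor."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["date", "sensor_id", "avg", "min", "max"],
                            lineterminator="\n")
    writer.writeheader()
    for s in sorted(summaries, key=lambda s: s["sensor_id"]):
        writer.writerow({"date": report_date, "sensor_id": s["sensor_id"],
                         "avg": f"{s['avg']:.2f}", "min": s["min"], "max": s["max"]})
    return buf.getvalue()


report = build_daily_report("2024-05-01", [
    {"sensor_id": "fridge-2", "avg": 3.25, "min": 2.1, "max": 5.0},
    {"sensor_id": "fridge-1", "avg": 4.0, "min": 3.5, "max": 4.4},
])
print(report)
```

Sorting by sensor ID keeps the output stable from one day to the next, which makes reports easy to compare.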

Setting Up Your Remote IoT Batch Job

Setting up a remote IoT batch job involves a few key steps. It isn't overly complicated once you break it down, but each part plays an important role in making sure you get the data you need. The goal is to get information from distant devices, bring it together, and do something useful with it, focusing on what has happened over the last day. Let's go through the pieces.

Data Collection and Storage

The first step is getting the data from your remote IoT devices. These devices typically send their information to a central point, often called an IoT platform or data hub, which is designed to receive messages from many sources at once. Think of it as a big post office box for all your device messages. The data usually arrives in a raw format, just numbers and codes, but it all gets collected in one place.

Once the data arrives, it needs somewhere to live. For batch jobs, raw data usually goes into a database or a data lake, both of which are designed to hold large amounts of information efficiently. Yesterday's data sits there waiting for your batch job to pick it up, like a warehouse where all of the day's deliveries are stacked up waiting to be sorted. This setup makes it easy to access everything when you need it.
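
A common way to make "yesterday's data" cheap to find in a data lake is to partition files by date, so the batch job reads one folder instead of scanning everything. The sketch assumes a Hive-style `date=YYYY-MM-DD` folder layout under a hypothetical `readings/` prefix and UTC dates; your storage system may use a different scheme.

```python
from collections import defaultdict
from datetime import datetime, timezone
from typing import Dict, Iterable, List, Tuple

Reading = Tuple[str, float, float]  # (device_id, unix_timestamp_seconds, value)


def partition_key(ts: float) -> str:
    """Date partition path for a reading, e.g. 'readings/date=2024-05-01/' (UTC)."""
    day = datetime.fromtimestamp(ts, tz=timezone.utc).date()
    return f"readings/date={day.isoformat()}/"


def partition_readings(readings: Iterable[Reading]) -> Dict[str, List[Reading]]:
    """Group readings by the partition they would be written to."""
    parts: Dict[str, List[Reading]] = defaultdict(list)
    for r in readings:
        parts[partition_key(r[1])].append(r)
    return dict(parts)
```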

Reliable storage matters: if data isn't saved properly, your batch job has nothing to work with. You want every reading sent by your remote devices, especially everything that arrived since yesterday, to be stored safely. This foundational step underpins everything that follows.

Scheduling the Job

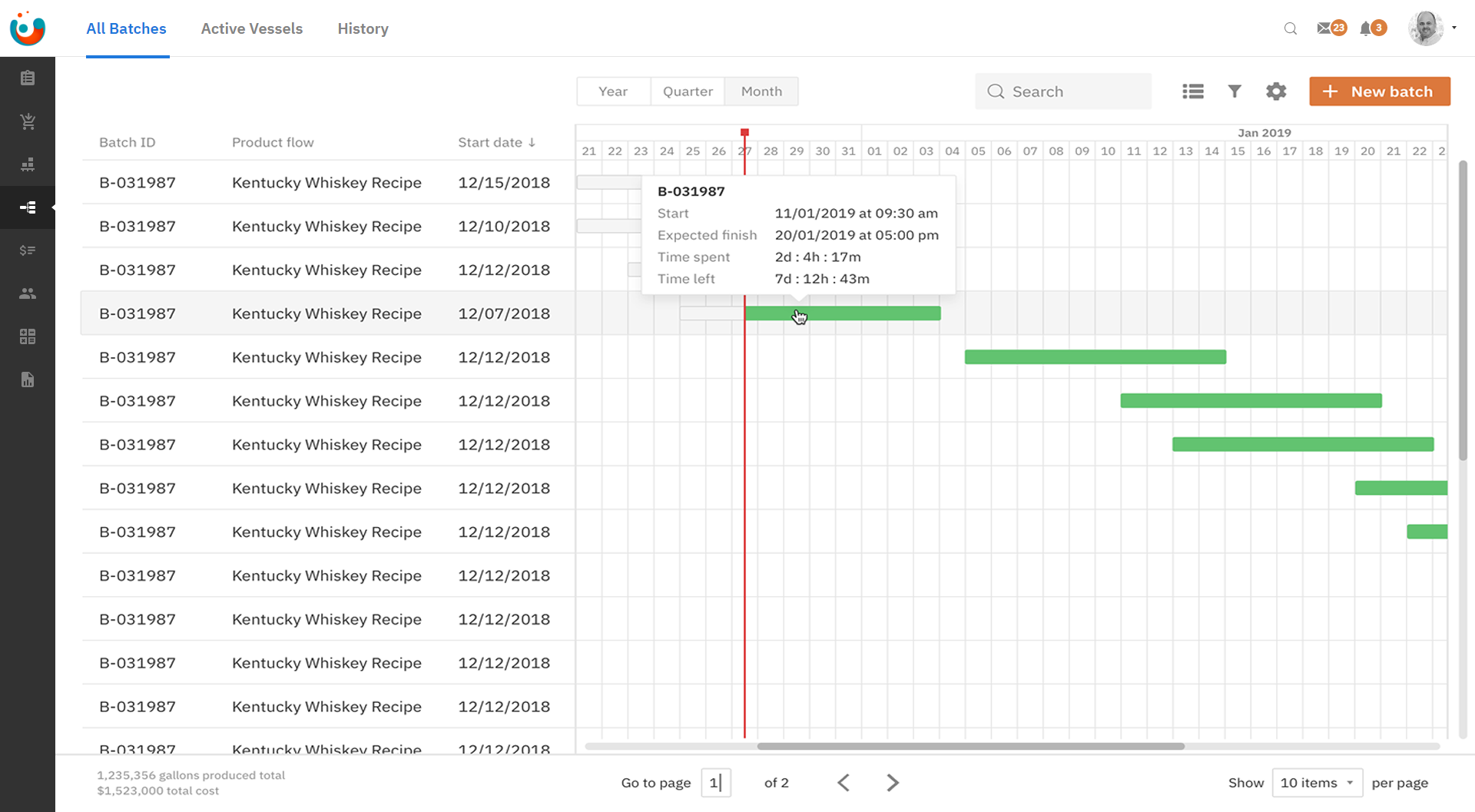

Once your data is safely stored, the next step is deciding when your batch job will run. This is called "scheduling." You can run it at a fixed time every day, say 1 AM, after all of yesterday's data has had time to arrive. Alternatively, you might run it every few hours, or whenever a certain amount of new data has accumulated. The right timing depends on what the processed data is for and how fresh it needs to be. For processing data since yesterday, a daily schedule usually works well.
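
A daily 1 AM schedule boils down to "the next 01:00 after now." In cron syntax that schedule is written `0 1 * * *`; the sketch below computes the same next run time in plain Python. It keeps the timezone of `now` and, as a simplifying assumption, ignores daylight-saving transitions.

```python
from datetime import datetime, time, timedelta


def next_daily_run(now: datetime, run_at: time = time(1, 0)) -> datetime:
    """Next occurrence of run_at (default 01:00) strictly after `now`."""
    candidate = datetime.combine(now.date(), run_at, tzinfo=now.tzinfo)
    if candidate <= now:
        # Today's slot has already passed, so use tomorrow's.
        candidate += timedelta(days=1)
    return candidate


print(next_daily_run(datetime(2024, 5, 1, 0, 30)))  # 2024-05-01 01:00:00
```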

Dedicated scheduling tools start jobs automatically, so you don't have to click a button every time. Many schedulers can also retry a failed job or notify you when something goes wrong. This automation is a big part of what makes batch jobs useful: you don't have to manually kick off the processing of yesterday's data every morning.
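
The "restart a job if it fails, or send you a message" behavior is often implemented as retries with exponential backoff. This is a minimal sketch, not any specific scheduler's API: `run_with_retries`, the attempt count, and the delays are hypothetical, and `notify` stands in for whatever alerting channel you use.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(job: Callable[[], T], max_attempts: int = 3,
                     base_delay_s: float = 1.0,
                     notify: Callable[[str], None] = print) -> T:
    """Run `job`, retrying with exponential backoff and notifying on each failure."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return job()
        except Exception as exc:
            notify(f"attempt {attempt}/{max_attempts} failed: {exc!r}")
            if attempt == max_attempts:
                raise  # give up and let the caller mark the job as failed
            # Backoff: 1 s, 2 s, 4 s, ... with the default base delay.
            time.sleep(base_delay_s * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```

Backing off between attempts gives a briefly unavailable database or network link time to recover instead of hammering it.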

When you set up the schedule, you also tell the job which data to look at. For a job that processes yesterday's data, you'd select everything that arrived from midnight at the start of yesterday up to, but not including, midnight at the start of today. This time window ensures you process only the period you're interested in, and because consecutive windows meet exactly at midnight, no reading is counted twice or skipped between runs. It's like telling a robot, "Go get all the blue boxes that arrived on Tuesday," rather than just "Go get boxes."
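
The "yesterday" window can be computed as a half-open interval `[start of yesterday, start of today)`. This sketch assumes timezone-aware datetimes and defaults to UTC; pass a different `tzinfo` if "yesterday" should follow a local calendar day.

```python
from datetime import datetime, time, timedelta, timezone, tzinfo
from typing import Tuple


def yesterday_window(now: datetime, tz: tzinfo = timezone.utc) -> Tuple[datetime, datetime]:
    """Half-open window [start of yesterday, start of today) in timezone tz.

    `now` must be timezone-aware."""
    today = now.astimezone(tz).date()
    start_today = datetime.combine(today, time(0, 0), tzinfo=tz)
    start_yesterday = datetime.combine(today - timedelta(days=1), time(0, 0), tzinfo=tz)
    return start_yesterday, start_today


def in_window(ts: datetime, window: Tuple[datetime, datetime]) -> bool:
    """True if ts falls inside the window; the end instant is excluded."""
    start, end = window
    return start <= ts < end
```

Excluding the end instant is what lets tomorrow's run pick up exactly where today's left off.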

Processing the Information

This is where the actual work happens. When the batch job starts, it reads yesterday's relevant data from storage and performs whatever tasks you've defined: cleaning up messy data, combining different sources, calculating averages, or looking for unusual readings. The specific steps depend on what you want to learn from your IoT devices, so the approach is quite flexible.

For instance, if your remote IoT devices are temperature sensors, the batch job might calculate each sensor's average temperature for yesterday, along with the highest and lowest readings. If a temperature went outside a safe range, the job could flag it as an alert. All of these operations run over the whole batch of data at once, which is why it's called a batch job, and for a daily summary like this it is much more efficient than examining each reading as it comes in.
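
The per-sensor average, minimum, maximum, and out-of-range flag described above can be computed in one pass over the batch. This is a sketch assuming readings arrive as `(sensor_id, temperature)` pairs; the 2 to 8 °C safe range is a hypothetical cold-storage example.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def summarize(readings: Iterable[Tuple[str, float]], safe_min: float = 2.0,
              safe_max: float = 8.0) -> Dict[str, dict]:
    """Per-sensor avg/min/max plus an alert flag for out-of-range readings."""
    by_sensor: Dict[str, List[float]] = defaultdict(list)
    for sensor_id, temp in readings:
        by_sensor[sensor_id].append(temp)

    summary = {}
    for sensor_id, temps in by_sensor.items():
        out_of_range = [t for t in temps if t < safe_min or t > safe_max]
        summary[sensor_id] = {
            "avg": mean(temps), "min": min(temps), "max": max(temps),
            "alert": bool(out_of_range), "out_of_range_count": len(out_of_range),
        }
    return summary
```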

The output of this processing can be stored in another database, sent to a reporting tool, or used to trigger an alert if something critical turns up. The idea is to transform raw data into something meaningful and actionable. This is where looking at data since yesterday really pays off: you get a clear, summarized picture of what happened rather than a stream of numbers.

Benefits of This Approach

Using a remote IoT batch job offers several advantages. One big benefit is efficiency. Instead of reacting to every tiny piece of data as it arrives, you process large chunks of information at once. This uses computing resources more effectively, especially if the job runs during off-peak hours when systems are less busy. It's like doing your laundry once a week in a big machine instead of washing each item by hand as you wear it.

Another strength is data consistency and accuracy. When you process data in batches, you apply the same rules and calculations to everything from a given period, such as all the data that arrived since yesterday. This keeps your reports and analyses uniform and reliable, and it avoids discrepancies that can arise when data is processed at different times under different system states.

This method is also excellent for historical analysis and trend identification. By processing yesterday's data regularly, you build up a detailed history of how your remote IoT systems perform. That record is invaluable for spotting long-term trends, predicting future needs, and tracing the root cause of problems that started a while ago. It gives you a much fuller picture than looking only at what's happening right now.

Batch processing can also help manage network traffic. Instead of devices constantly sending small messages, which can overwhelm networks, a batch approach allows more controlled data transfer: devices can buffer readings and upload them together, or the batch job can run on a server that pulls data at set times, reducing continuous strain. This is particularly useful in remote locations where connectivity is spotty or expensive.
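
On the device side, "more controlled data transfer" often means buffering readings and uploading them in groups. The class below is a hypothetical sketch: `send` stands in for whatever upload call the device actually uses and is assumed to return `True` on success; failed uploads keep the readings for a later attempt.

```python
from typing import Callable, List, Tuple

Reading = Tuple[float, float]  # (unix_timestamp, value)


class UploadBuffer:
    """Hypothetical device-side buffer that uploads readings in batches."""

    def __init__(self, send: Callable[[List[Reading]], bool], batch_size: int = 50):
        self.send = send              # returns True if the upload succeeded
        self.batch_size = batch_size
        self.pending: List[Reading] = []

    def add(self, reading: Reading) -> None:
        self.pending.append(reading)
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self) -> bool:
        """Try to upload everything pending; keep it if the upload fails."""
        if not self.pending:
            return True
        batch = list(self.pending)
        if self.send(batch):
            del self.pending[:len(batch)]  # drop only what was actually sent
            return True
        return False  # retry on a later flush, e.g. when the link is back
```

One message per 50 readings instead of 50 separate messages cuts per-message overhead, at the cost of slightly older data reaching the server.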

Common Challenges and How to Handle Them

Remote IoT batch jobs are useful, but they come with their own challenges. One is the sheer volume of data. As more devices come online, the amount of collected information can grow quickly, straining storage and lengthening processing times. You need a plan for scaling your systems as your data grows; otherwise it's like trying to fit more and more books onto a bookshelf that's already full.

Another consideration is data quality. Remote devices sometimes send incomplete or incorrect readings, and this "dirty data" can skew your batch job's results. You need ways to clean and validate the information before processing, such as rules that filter out bad readings or fill in missing gaps. It's like sorting the good apples from the bad before you bake a pie.
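
A simple cleaning pass can drop missing values, physically implausible values, and duplicate deliveries before any averages are computed. This sketch only filters (it does not fill gaps); the -40 to 85 °C plausibility range and the `(sensor_id, timestamp, value)` tuple layout are hypothetical assumptions.

```python
import math
from typing import Iterable, List, Optional, Tuple

Raw = Tuple[str, float, Optional[float]]  # (sensor_id, unix_timestamp, value or None)


def clean_readings(raw: Iterable[Raw], valid_min: float = -40.0,
                   valid_max: float = 85.0) -> List[Tuple[str, float, float]]:
    """Drop missing/NaN values, out-of-range values, and duplicate
    (sensor_id, timestamp) pairs, keeping the first occurrence."""
    seen = set()
    cleaned = []
    for sensor_id, ts, value in raw:
        if value is None or math.isnan(value):
            continue  # missing reading
        if not (valid_min <= value <= valid_max):
            continue  # physically implausible
        key = (sensor_id, ts)
        if key in seen:
            continue  # duplicate delivery of the same reading
        seen.add(key)
        cleaned.append((sensor_id, ts, value))
    return cleaned
```

Deduplicating matters because devices that retry uploads after a dropped connection can deliver the same reading more than once.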

Network reliability can also be a hurdle. If your remote devices can't consistently reach central storage, your batch job won't have all of yesterday's data. You may need devices that store data locally and retry sending it later, plus checks that detect when data is missing. This matters most for devices in very remote areas where internet access is limited.
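
One way to detect missing data is to check which hourly slots in yesterday's window received no readings from a device. The sketch assumes each device is expected to report at least once per hour; that cadence and the function name are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Dict, Iterable, List, Set


def missing_hours(timestamps_by_device: Dict[str, Iterable[datetime]],
                  window_start: datetime, hours: int = 24) -> Dict[str, List[datetime]]:
    """For each device, list the hourly slots in [window_start, window_start + hours)
    that received no readings. Devices with no gaps are omitted."""
    window_end = window_start + timedelta(hours=hours)
    result: Dict[str, List[datetime]] = {}
    for device, stamps in timestamps_by_device.items():
        seen: Set[int] = set()
        for ts in stamps:
            if window_start <= ts < window_end:
                # Index of the hourly slot this reading falls into.
                seen.add(int((ts - window_start).total_seconds() // 3600))
        gaps = [window_start + timedelta(hours=h) for h in range(hours) if h not in seen]
        if gaps:
            result[device] = gaps
    return result
```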

Finally, making sure the batch job runs on time and finishes successfully can be tricky. Jobs can fail for many reasons: a server might go down, or the processing code might have a bug. Good monitoring that tells you when a job has failed, along with tools that help you fix it quickly, ensures you reliably get your daily reports and insights from yesterday's data.
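
A basic monitoring check compares the time of the last successful run against the expected cadence. This is a hypothetical sketch: it assumes you record when the job last succeeded, and the one-day cadence and two-hour grace period are illustrative defaults.

```python
from datetime import datetime, timedelta
from typing import Optional


def job_status(last_success: Optional[datetime], now: datetime,
               expected_every: timedelta = timedelta(days=1),
               grace: timedelta = timedelta(hours=2)) -> str:
    """Classify a recurring job as 'ok', 'overdue', or 'never_ran'."""
    if last_success is None:
        return "never_ran"
    if now - last_success > expected_every + grace:
        return "overdue"  # no successful run within the expected cadence
    return "ok"
```

Checking for the absence of a success, rather than only for error messages, also catches jobs that never started at all.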

Tools and Systems You Might Use

To build a remote IoT batch job, you'll typically combine several tools and systems. To collect data from the devices, you might use an IoT platform. These platforms handle connections from many devices, manage device identities, and receive their data securely. They act as the front door for all your device information.

To store large amounts of data, you would probably use a cloud storage service or a database designed for big data. These systems store vast quantities of information efficiently, make it easy to retrieve specific chunks (such as all of yesterday's data), and can grow as your data needs grow.

For the batch processing itself, you might use a data processing framework: software that helps you write code to clean, transform, and analyze data. These frameworks are built for large datasets and can often distribute the work across multiple computers to speed things up, much like a factory where many machines work on different parts of the same job at the same time.
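
Distributing work usually follows a map/reduce pattern: each worker summarizes one chunk into a small partial result, and the partials are merged. Averages can't be merged directly, so each partial keeps a count and a sum instead. This sketch uses threads on one machine purely to show the pattern; real frameworks spread the chunks across many computers, and the names here are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from functools import reduce
from typing import List


@dataclass(frozen=True)
class Partial:
    """Mergeable partial aggregate for one chunk of readings."""
    count: int
    total: float
    lo: float
    hi: float

    def merge(self, other: "Partial") -> "Partial":
        return Partial(self.count + other.count, self.total + other.total,
                       min(self.lo, other.lo), max(self.hi, other.hi))


def summarize_chunk(values: List[float]) -> Partial:
    """'Map' step: summarize one non-empty chunk."""
    return Partial(len(values), sum(values), min(values), max(values))


def parallel_summary(chunks: List[List[float]], workers: int = 4) -> dict:
    non_empty = [c for c in chunks if c]
    if not non_empty:
        raise ValueError("no readings to summarize")
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(summarize_chunk, non_empty))
    combined = reduce(Partial.merge, partials)  # 'reduce' step
    return {"count": combined.count, "avg": combined.total / combined.count,
            "min": combined.lo, "max": combined.hi}
```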

For scheduling and orchestrating batch jobs, there are job schedulers and workflow management tools. They make sure your batch job runs at the right time and in the right order, and they handle errors that pop up. Think of them as the conductor of your data processing orchestra, making sure each instrument plays its part at the right moment. Together, these tools give you a complete picture of your remote IoT data, especially when you're looking back at what happened since yesterday.
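
"In the right order" means running tasks in a dependency (topological) order. Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) computes such an order; the pipeline below with its extract, clean, aggregate, report, and alert steps is a hypothetical example. Workflow tools add scheduling, retries, and persistence on top of this basic idea.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_pipeline(tasks: Dict[str, Callable[[], None]],
                 depends_on: Dict[str, Set[str]]) -> List[str]:
    """Run each task only after its dependencies; return the order used.

    Raises graphlib.CycleError if the dependencies form a cycle."""
    order = list(TopologicalSorter(depends_on).static_order())
    for name in order:
        tasks[name]()
    return order


ran: List[str] = []
names = ["extract", "clean", "aggregate", "report", "alert"]
tasks = {n: (lambda n=n: ran.append(n)) for n in names}
order = run_pipeline(tasks, {
    "clean": {"extract"},
    "aggregate": {"clean"},
    "report": {"aggregate"},
    "alert": {"aggregate"},
})
```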

Making Sense of the Results

Once your remote IoT batch job has finished its work, you'll have a set of processed data or reports. This is where the real value lies: turning those numbers into insights. For example, if the job analyzed energy usage from remote sensors, the results might show which machines used the most power yesterday, or whether there was an unexpected surge in consumption during the night. Information like this directly informs decisions.
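
Finding which machines used the most power yesterday amounts to summing consumption per machine and taking the largest totals. A small sketch, assuming readings are hypothetical `(machine_id, kWh)` pairs already filtered to yesterday's window:

```python
import heapq
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def top_consumers(readings: Iterable[Tuple[str, float]], n: int = 3) -> List[Tuple[str, float]]:
    """Sum kWh per machine and return the n largest as (machine_id, total_kwh)."""
    totals: Dict[str, float] = defaultdict(float)
    for machine_id, kwh in readings:
        totals[machine_id] += kwh
    return heapq.nlargest(n, totals.items(), key=lambda item: item[1])


print(top_consumers([("press-1", 5.0), ("press-2", 2.0), ("press-1", 4.0), ("oven", 7.5)], n=2))
# [('press-1', 9.0), ('oven', 7.5)]
```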

Data visualization tools can turn the processed data into charts, graphs, or dashboards. Seeing information visually makes it much easier to spot trends, anomalies, or areas that need attention; a line graph of temperature fluctuations throughout yesterday is usually far clearer than a long list of numbers. It helps you grasp the story the data is telling very quickly.

These insights lead to actions. Perhaps you discover that a particular remote device is drawing too much power, or that a temperature sensor reported an out-of-range reading yesterday. You might then send a technician to check the device, adjust its settings, or investigate further. The goal is to move from raw data to actionable intelligence that improves how your remote IoT systems operate: a continuous cycle of collecting, processing, and acting on information.

Frequently Asked Questions

People often have questions about how these systems work, especially about collecting information from distant devices and processing it later. Here are a few common ones.

What is a remote IoT batch job?

A remote IoT batch job is a program that runs automatically to process a large collection of information gathered from devices in distant locations. Rather than handling each piece of data as it arrives, it waits until a whole group of data, a "batch," has been collected, and then processes it all together. This is typically used for reports or analysis of historical data, such as everything that came in since yesterday.

How do you process IoT data from yesterday?

To process IoT data from yesterday, you would typically set up a scheduled batch job that reads from the storage where your remote device data is kept. It selects only the data that arrived during yesterday, from midnight at the start of the day up to midnight at its end, and then cleans, summarizes, or analyzes that data to give you useful insights about the period. It's a systematic way to look back at what happened.

Why use batch jobs for
